Building an Anycast Secondary DNS Service

Regular readers will remember how one of my friends offered to hold my beer while I went and started my own autonomous system, and then the two of us and some of our friends thought it would be funny to start an Internet Exchange, and that joke got wildly out of hand... Well, my lovely friend Javier has done it again.

About 18 months ago, Javier and I were hanging out having dinner, and he idly observed in my direction that he had built a DNS service back in 2009 that was still mostly running, but it had fallen into some disrepair and needed a bit of a refresh. Javier is really good at giving me fun little projects to work on... that turn out to be a lot of work. But I think that's what hobbies are supposed to look like? Maybe? So here we are.

With Javier's help, I went and built what I'm calling "Version Two" of Javier's NS-Global DNS Service.

Understanding Secondary DNS

Before we dig into NS-Global, let's talk about DNS for a few minutes. Most people's interaction with DNS is that they configure a DNS resolver like 1.1.1.1 or 9.9.9.9 on their network, and everything starts working better, because DNS is really important and a keystone to the rest of the Internet working.

And these resolving DNS services aren't what we built. Those are really kind of a pain to manage, and Cloudflare will always do a better job than us at marketing since they were able to get a pretty memorable IP address for it. But the resolving DNS services need somewhere to go to get answers for their users' questions, and that's where authoritative DNS servers come in.

When you ask a recursive DNS resolver for a resource record (like "A/IPv4 address for www.twitter.com"), it may very well know nothing about Twitter and need to start asking around for answers. The first place the resolver will go is one of the 13 root DNS servers (which I've talked about before), and ask "Hey, do you know the A record for www.twitter.com?" The answer back from the root DNS server is going to be "No, I don't know, but the name servers for com are over there". And that's all the root servers can do to help you: point you in the right direction for the top level domain name servers. You then ask one of the name servers for com (which happen to be run by Verisign) if they know the answer to your question, and they'll say no too, but they will know where the name servers for twitter.com are. So they'll point you in that direction, you can try asking the twitter.com name servers about IN/A,www.twitter.com, and they'll probably come back with the answer you were looking for the whole time.
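To make that referral chain concrete, here's a toy sketch in Python. The three "servers" and their data are made up (192.0.2.10 is from a documentation-only address range), and a real resolver sends actual DNS packets to real IP addresses, but the loop of "ask, get referred, ask the next server" has the same shape as the walk described above.

```python
from typing import Dict, List, Tuple

# Toy model: each hypothetical server knows which child zones it delegates
# (zone -> next server) and any records it can answer itself.
SERVERS: Dict[str, dict] = {
    "root": {"delegations": {"com.": "com-ns"}, "records": {}},
    "com-ns": {"delegations": {"twitter.com.": "twitter-ns"}, "records": {}},
    "twitter-ns": {
        "delegations": {},
        "records": {("www.twitter.com.", "A"): "192.0.2.10"},
    },
}


def ask(server: str, qname: str, qtype: str) -> Tuple[str, str]:
    """Return ("answer", data), ("referral", next_server) or ("nxdomain", "")."""
    data = SERVERS[server]
    if (qname, qtype) in data["records"]:
        return "answer", data["records"][(qname, qtype)]
    for zone, next_server in data["delegations"].items():
        # A name belongs to a delegated zone if it equals it or ends in ".<zone>".
        if qname == zone or qname.endswith("." + zone):
            return "referral", next_server
    return "nxdomain", ""


def resolve(qname: str, qtype: str = "A") -> Tuple[str, List[str]]:
    """Start at the root and follow referrals; return the answer and servers asked."""
    server, path = "root", []
    while True:
        path.append(server)
        kind, value = ask(server, qname, qtype)
        if kind == "referral":
            server = value  # go one level further down the hierarchy
            continue
        return value, path


answer, path = resolve("www.twitter.com.")
print(path, answer)
```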

So recursive DNS resolvers start at the 13 root servers, which can give you an answer for the very last piece of your query, and you work your way down the DNS hierarchy, moving right to left in the hostname, until you finally reach an authoritative DNS server with the answer you seek. And this is really an amazing system that is so scalable, because a resolver only critically depends on knowing the IP addresses for a few root servers, and can bootstrap itself into the practically infinitely large database that is DNS from there. Granted, needing to go back to the root servers to start every query would suck, so resolvers also keep a local cache of answers: once one query yields the NS records for "com" from a root server, those will sit in the local cache for a while and save the trip back to the root servers for every following query for anything ending in ".com".
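Here's a minimal sketch of that cache, assuming a simple in-memory dictionary keyed by name and record type. `TTLCache` and `closest_cached_zone` are hypothetical names, and the two-day TTL is just illustrative. The point is that a cached NS set for "com." lets the resolver skip the root until the TTL runs out.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class TTLCache:
    """Minimal resolver-style cache: each entry expires after its TTL in seconds."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock  # injectable so expiry can be simulated
        self._store: Dict[Tuple[str, str], Tuple[float, List[str]]] = {}

    def put(self, name: str, rtype: str, values: List[str], ttl: int) -> None:
        self._store[(name, rtype)] = (self._clock() + ttl, values)

    def get(self, name: str, rtype: str) -> Optional[List[str]]:
        entry = self._store.get((name, rtype))
        if entry is None:
            return None
        expires, values = entry
        if self._clock() >= expires:
            del self._store[(name, rtype)]  # stale: force a fresh lookup
            return None
        return values


def closest_cached_zone(cache: TTLCache, qname: str) -> str:
    """Walk from the full name toward the root and return the most specific
    zone whose NS records are still cached ("." means start at the root)."""
    labels = qname.rstrip(".").split(".")
    for i in range(len(labels)):
        zone = ".".join(labels[i:]) + "."
        if cache.get(zone, "NS") is not None:
            return zone
    return "."


now = [0.0]  # fake clock so we can fast-forward time
cache = TTLCache(clock=lambda: now[0])
cache.put("com.", "NS", ["a.gtld-servers.net."], ttl=172800)
before = closest_cached_zone(cache, "www.example.com.")
now[0] += 172800  # two days later, the cached NS set has expired
after = closest_cached_zone(cache, "www.example.com.")
print(before, after)
```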

But we didn't build a resolver. We built an authoritative DNS service, which sits down at the bottom of the hierarchy with a little database of answers (called a zone file) waiting for some higher level DNS server to refer a resolver to us for a query only we can answer.

Most people's experience with authoritative DNS is that when they register their domain with Google Domains or GoDaddy, they get some kind of web interface where they can enter IP addresses for their web server, configure where email for that domain gets sent, etc., and that's fine. Registrars run authoritative DNS servers that you can use, but for various reasons, people may opt not to use their registrar's DNS servers, and instead just tell the registrar "I don't want to use your DNS service, but put these NS records in the top level domain zone file for me so people know where to look for questions about my domain."

Primary vs Secondary Authoritative

So authoritative DNS servers locally store their own little chunk of the enormous DNS database, and every other DNS server which sends them a query either gets the answer out of the local database, or a referral to a lower level DNS server, or some kind of error message. Just what the network admin for a domain name is looking for!
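As a rough sketch of those outcomes, here's a toy authoritative lookup for a hypothetical example.com zone with one delegation cut. It distinguishes a real answer, a referral, and a few of the "no" responses: NXDOMAIN for a name that doesn't exist, an empty NODATA answer when the name exists but not with that record type, and REFUSED for zones the server doesn't host. Real servers also handle wildcards, CNAMEs, and glue records, which this skips.

```python
from typing import Dict, Optional, Tuple

# Hypothetical zone data for example.com, with "lab.example.com" delegated
# to a different set of name servers.
ZONE_ORIGIN = "example.com."
RECORDS: Dict[Tuple[str, str], str] = {
    ("www.example.com.", "A"): "192.0.2.80",
    ("example.com.", "MX"): "10 mail.example.com.",
}
DELEGATIONS: Dict[str, str] = {"lab.example.com.": "ns1.lab.example.net."}


def in_zone(name: str, zone: str) -> bool:
    return name == zone or name.endswith("." + zone)


def authoritative_lookup(qname: str, qtype: str) -> Tuple[str, Optional[str]]:
    """Classify a single query against the zone data above."""
    if not in_zone(qname, ZONE_ORIGIN):
        return "REFUSED", None  # we don't host this zone at all
    for child, ns in DELEGATIONS.items():
        if in_zone(qname, child):
            return "REFERRAL", ns  # a server lower in the tree owns this name
    if (qname, qtype) in RECORDS:
        return "ANSWER", RECORDS[(qname, qtype)]
    if any(name == qname for name, _ in RECORDS):
        return "NODATA", None  # NOERROR with an empty answer section
    return "NXDOMAIN", None


print(authoritative_lookup("www.example.com.", "A"))
print(authoritative_lookup("host.lab.example.com.", "A"))
```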

But DNS is also clever in that it has the concept of a primary authoritative server and secondary authoritative servers. From the resolver's perspective, they're all the same, but if you only had one DNS server with the zone file for your domain and it went offline, that's the end of anyone in the whole world being able to find any IP addresses or other resource records for anything in your domain, so you really probably want to recruit someone else to also host your zone to keep it available while you're having some technical difficulties. You could stand up a second server and make the same edits to your zone database on both, but that'd also be a pain, so DNS even supports the concept of a full zone transfer (AXFR), which copies the zone database from one server to another so it's available in multiple places.
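For the curious, an AXFR request is just an ordinary DNS query with the special query type 252 (AXFR), sent over TCP. The sketch below builds those bytes by hand with Python's standard library, assuming a zone name made of plain ASCII labels; `encode_name` and `build_axfr_query` are hypothetical helpers. In practice a library like dnspython (`dns.query.xfr()`) builds the query and parses the stream of records that comes back.

```python
import struct

QTYPE_AXFR = 252  # query type meaning "send me the entire zone"
QCLASS_IN = 1     # the Internet class


def encode_name(name: str) -> bytes:
    """Encode a domain name as DNS wire-format labels (no compression)."""
    if name in ("", "."):
        return b"\x00"  # the root is a single zero-length label
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"bad label: {label!r}")
        out += bytes([len(raw)]) + raw  # length byte, then the label itself
    return out + b"\x00"


def build_axfr_query(zone: str, query_id: int = 0x1234) -> bytes:
    """Build a TCP-framed AXFR query for `zone`."""
    # Header: ID, flags (all zero), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0, 1, 0, 0, 0)
    question = encode_name(zone) + struct.pack("!HH", QTYPE_AXFR, QCLASS_IN)
    message = header + question
    # DNS over TCP prefixes every message with a two-byte length field.
    return struct.pack("!H", len(message)) + message


query = build_axfr_query("example.com.")
print(query.hex())
```

The primary then streams the whole zone back over the same TCP connection, starting and ending with the zone's SOA record.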

This secondary service is the only thing that NS-Global provides: you create your zone file however you like, hosted on your own DNS server somewhere else, and then you allow us to use the DNS AXFR protocol to transfer your whole zone file into NS-Global, and we answer questions about your zone from that copy if anyone asks us. Then you can go back to your DNS registrar and add us to your list of name servers for your zone, and the availability and performance of your zone improve because it's no longer depending on your single DNS server.
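How does a secondary know when to pull a zone again? Standard DNS secondaries compare the serial number in the primary's SOA record against the serial of their own copy, using the wraparound-aware "serial number arithmetic" from RFC 1982. The post doesn't say how NS-Global schedules its pulls, so treat this as a sketch of the general mechanism; `serial_newer` and `needs_transfer` are hypothetical names.

```python
from typing import Optional

SERIAL_BITS = 32
MOD = 2 ** SERIAL_BITS
HALF = 2 ** (SERIAL_BITS - 1)


def serial_newer(candidate: int, current: int) -> bool:
    """RFC 1982 comparison: is `candidate` newer than `current`?
    SOA serials wrap around at 2**32, so a plain `>` is not enough."""
    if candidate == current:
        return False
    diff = (candidate - current) % MOD
    # Exactly half the number space apart is undefined; treat as "not newer".
    return diff < HALF


def needs_transfer(primary_serial: int, local_serial: Optional[int]) -> bool:
    """Re-pull the zone when we have no copy yet or the primary's serial moved forward."""
    return local_serial is None or serial_newer(primary_serial, local_serial)


print(needs_transfer(2024010102, 2024010101))  # primary was edited, so pull again
print(needs_transfer(5, 4294967290))           # serial wrapped around, still newer
```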

The Performance of Anycast

Building a secondary DNS service where we run a server somewhere, and then act as a second DNS server for zones is already pretty cool, but not stupid enough to satisfy my usual readers, and this is where anycast comes in.

Anycast overcomes three problems with us running NS-Global as just one server in Fremont:

- The speed of light is a whole hell of a lot slower than we'd really like

- Our network in Fremont might go offline for some reason

- With enough users (or someone attacking our DNS server) we might get more DNS queries than we can answer from a single server

And anycast to the rescue! Anycast is where you have multiple servers world-wide all configured essentially the same, with the same local IP address, then use the Border Gateway Protocol to have them all announce that same IP address into the Internet routing table. By having all the servers announce the same IP address, to the rest of the Internet they all look like they're the same server, which just happens to have a lot of possible options for how to route to it, so every network in the Internet will choose the anycast server closest to them, and route traffic to NS-Global to that server.

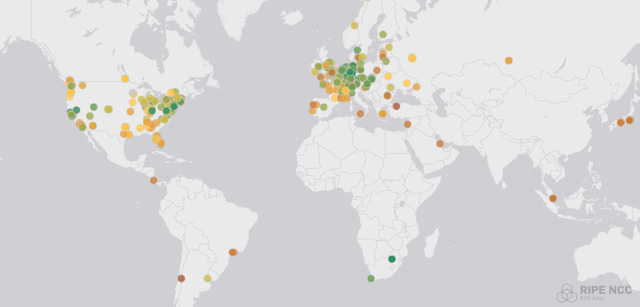

Looking at the map above, I used the RIPE Atlas sensor network (which I've also written about before) to measure the latency it takes to send a DNS query to a server in Fremont, California from various places around the world. Broadly speaking, you can see that sensors on the west coast are green (lower latency) in the 0-30ms range. As you move east across the North American continent, the latency gets progressively worse, and as soon as you need to send a query to Fremont from another continent, things get MUCH worse, with Europe seeing 100-200ms of latency, and places like Africa feeling even more pain with query times in the 200-400ms range.

And the wild part is that this is a function of the speed of light. DNS queries from Europe just take longer to make it to Fremont and back than a query from the United States.
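You can put a floor under those numbers with some back-of-the-envelope math. Light in optical fiber travels at roughly two thirds of its speed in a vacuum, or about 200 km per millisecond, so even a perfectly straight fiber run sets a minimum round trip. The coordinates below are approximate, and real fiber routes are never great circles, so actual latency is always higher than this estimate.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # roughly 2/3 of c in glass


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Lower bound on round-trip time over a perfectly straight fiber path."""
    return 2 * great_circle_km(lat1, lon1, lat2, lon2) / FIBER_KM_PER_MS


FREMONT = (37.55, -121.99)  # approximate coordinates
for city, coords in {"Frankfurt": (50.11, 8.68), "Johannesburg": (-26.20, 28.05)}.items():
    print(f"{city}: at least {min_rtt_ms(*FREMONT, *coords):.0f} ms")
```

For Fremont to Frankfurt this works out to roughly 90 ms before any router has touched a packet (and around 170 ms for Johannesburg), which is why the 100-200ms the RIPE Atlas probes in Europe saw isn't something a faster server in Fremont could ever fix.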

But if we instead deploy a dozen servers worldwide, have all of them locally store all the DNS answers to questions we might get, and have them all claim to be the same IP address, we can beat this speed of light problem. Networks on the west coast can route to our Fremont or Salt Lake City servers, and that's fine, but east coast networks can decide that it's less work to route traffic to our Reston, Virginia or New York City locations, and their traffic doesn't need to travel all the way across the continent and back.

In Europe, they can route their queries to our servers in Frankfurt or London, and to them it seems like NS-Global is a Europe-based service, because there's no way they could have sent their DNS query to the US and gotten an answer back as soon as they did (because physics!). We even managed to get servers in São Paulo, Brazil; Johannesburg, South Africa; and Tokyo, Japan.

So now NS-Global is pretty well global, and in the aggregate we generally tend to beat the speed of light compared to a single DNS server, regardless of how well connected that server is in one location. Since we're also using the same address and the BGP routing protocol in all of these locations, if one of our sites falls off the face of the Internet, it... really isn't a big deal. When a site goes offline, the BGP announcement from that location disappears, but there are a dozen other sites also announcing the same block, so maybe latency goes up a little bit, but the Internet just routes queries to the next closest NS-Global server and things carry on like nothing happened.
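Here's a deliberately tiny model of that failover from the point of view of one network, assuming it simply prefers whichever announcement of the anycast prefix has the fewest AS hops. The site names come from this post, but the hop counts are invented, and real BGP path selection has many more steps.

```python
from typing import Dict, Optional


def best_site(announcements: Dict[str, int]) -> Optional[str]:
    """Pick the site whose announcement has the shortest AS path
    (ties broken by name). A big simplification of real BGP."""
    if not announcements:
        return None  # nobody announces the prefix: unreachable
    return min(announcements, key=lambda site: (announcements[site], site))


def withdraw(announcements: Dict[str, int], site: str) -> Dict[str, int]:
    """Routing view after `site` stops announcing (e.g. it went offline)."""
    return {s: hops for s, hops in announcements.items() if s != site}


# Hypothetical view from a European ISP: site -> AS hops to that announcement.
view = {"Frankfurt": 2, "London": 3, "Reston": 4, "Tokyo": 5}
print(best_site(view))                         # normal day
print(best_site(withdraw(view, "Frankfurt")))  # Frankfurt falls off the Internet
```

Nothing on the client side changes when Frankfurt disappears: the same anycast address simply resolves to a different best route.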

Is it always perfect? No. Tuning an anycast network is a little like balancing an egg on its end. It's possible, but not easy, and easy to perturb. Routing on the Internet unfortunately isn't based on the geographically shortest path, but on BGP's own idea of distance, which comes from each network's routing policies and metrics like how many autonomous systems an announcement has passed through. So when we turn on a new location somewhere like Tokyo, because our transit provider there happens to be well connected, suddenly half the traffic in Europe starts getting sent to Tokyo until we turn some knobs and try to make Frankfurt look more attractive to Europe than Tokyo again. The balancing act is that every time we add a new location, or even if we don't do anything and the topology of the rest of the Internet just changes, traffic might not get divided between sites like we'd really like it to, and we need to start reshuffling settings again.
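This post doesn't say which knobs NS-Global actually turns, but one common one is AS-path prepending: an anycast site repeats its own AS number in its announcement so the path looks longer and less attractive. The toy below assumes a simplified best-path rule (highest local preference, then shortest AS path) and uses documentation-range AS numbers; it shows a European network preferring Tokyo until Tokyo's announcement is prepended twice.

```python
from dataclasses import dataclass
from typing import List

MY_ASN = 64500  # documentation-range ASN standing in for our real one


@dataclass
class Route:
    site: str
    local_pref: int      # set by the receiving network's own policy
    as_path: List[int]   # ASNs the announcement traversed, origin last


def best(routes: List[Route]) -> Route:
    """Simplified BGP best path: highest local-pref, then shortest AS path.
    (Real BGP has several more tie-breakers after these two.)"""
    return max(routes, key=lambda r: (r.local_pref, -len(r.as_path)))


def prepend(route: Route, times: int) -> Route:
    """AS-path prepending: repeat our own ASN so the path looks longer."""
    return Route(route.site, route.local_pref, route.as_path + [MY_ASN] * times)


# Hypothetical view from a European network.
europe_view = [
    Route("Frankfurt", 100, [64497, 64498, MY_ASN]),
    Route("Tokyo", 100, [64496, MY_ASN]),  # well-connected transit: short path
]
before = best(europe_view).site
europe_view[1] = prepend(europe_view[1], 2)  # make Tokyo look two hops "farther"
after = best(europe_view).site
print(before, "->", after)
```

The catch is that prepending Tokyo changes what every network hearing that announcement sees, not just Europe, which is exactly why each adjustment can knock some other region out of balance.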

Using NS-Global

Historically, to use NS-Global, you needed to know Javier, and email him to add your zones to NS-Global. But that was a lot of work for Javier, answering emails, and typing, and stuff, so we decided to automate it! I created a sign-up form for NS-Global, so anyone hosting their own zone who wants to use us as a secondary service can just fill in their domain name and the IP addresses we should pull the zone from.

The particularly clever part (in my opinion) is that things like usernames or logging in or authentication with cookies seem like a lot of work, so I decided that NS-Global wouldn't have usernames or login screens. You just show up, enter your domain, and press submit.

But people on the Internet are assholes, and we can't trust the Internet people to just let us have nice things, so we still needed some way to authenticate users as being rightfully in control of a domain before we would add or update their zone in our database, and that's where the RNAME field in the Start of Authority record comes in. Every DNS zone starts with a special record called the SOA record, which includes a field containing the encoded email address of the zone admin. So when someone submits a new zone to NS-Global, we go off and look up the existing SOA for that zone, and send an email to the RNAME email address with a confirmation link to click if they agree with whoever filled out our form about a desire to use NS-Global. Done.
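The RNAME trick relies on a convention from the original DNS RFCs: the first label of RNAME is the mailbox's local part and the rest is the mail domain, with any literal dot in the local part escaped as `\.`. Here's a small sketch of turning an RNAME into an address to send the confirmation link to. How NS-Global actually parses it isn't described here, and `rname_to_email` is a hypothetical name. (With dnspython, for instance, the SOA record's `rname` attribute gives you the raw name to feed in as text.)

```python
def rname_to_email(rname: str) -> str:
    """Convert an SOA RNAME such as "hostmaster.example.com." to an email
    address. The first unescaped dot becomes "@"; a backslash-escaped dot
    in the local part stays a literal dot."""
    name = rname.rstrip(".")
    i = 0
    while i < len(name):
        if name[i] == "\\":
            i += 2  # skip the escaped character
            continue
        if name[i] == ".":
            local, domain = name[:i], name[i + 1:]
            return local.replace("\\.", ".") + "@" + domain
        i += 1
    raise ValueError(f"RNAME has no domain part: {rname!r}")


print(rname_to_email("hostmaster.example.com."))
print(rname_to_email("john\\.doe.example.com."))
```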

This blog post is getting a little long, so I will probably leave other details like how we push new zones out to all the identical anycast servers in a few seconds or what BGP traffic engineering looks like to their own posts in the future. Until then, if you run your own DNS server and want to add a second name server as a backup, feel free to sign up at our website!